Introduction

In the field of research, evaluation, and clinical practice, interrater reliability plays a crucial role in ensuring consistency and accuracy when multiple observers or raters are involved in assessing the same subject or data. This statistical measure assesses the level of agreement between different raters when they apply the same criteria to evaluate or classify something. High interrater indicates that different raters reach similar conclusions, enhancing the credibility of the data. Whether used in psychological testing, medical diagnosis, or educational grading, achieving consistent results across raters is essential to maintaining the reliability of studies and decisions.

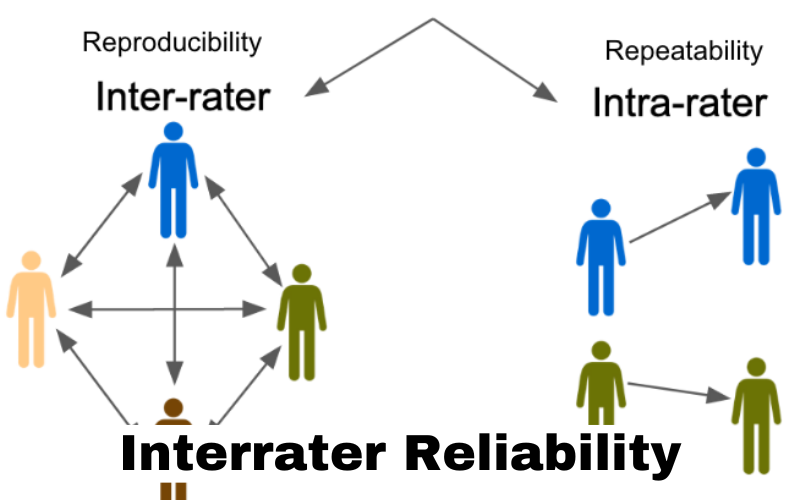

What is Interrater Reliability?

Interrater reliability is a statistical measure used to determine how consistently two or more independent raters agree in their observations or judgments. It reflects the extent to which subjective assessments are reproducible across different evaluators.

In settings such as clinical diagnosis, performance appraisals, or content analysis, different individuals often assess the same data. Discrepancies between their ratings can introduce biases, distort outcomes, and undermine confidence in the results. By calculating, researchers can quantify the degree of agreement and take steps to enhance rater consistency.

Why is Interrater Reliability Important?

Ensuring strong interrater reliabilit is essential in fields where subjective judgment influences outcomes. Inconsistent ratings between raters can compromise data validity, reduce confidence in findings, and introduce bias. Below are some key reasons why is important:

- Enhances Data Quality: Reliable assessments ensure more consistent, credible data.

- Reduces Bias: Helps eliminate subjectivity and personal bias among raters.

- Increases Study Replicability: Research findings become more reproducible if interrater consistency is maintained.

- Improves Training Quality: High agreement reflects successful training and clear criteria for assessment.

Without strong interrater reliability, even well-designed research can suffer from inconsistencies that weaken conclusions.

Types of Interrater Reliability

There are several ways to measure interrater reliability, depending on the nature of the data being collected. Common types include:

1. Percent Agreement

The simplest method, where agreement is calculated as the percentage of cases where all raters provide the same rating. While intuitive, it can overestimate reliability when there are few rating categories.

2. Cohen’s Kappa

Cohen’s Kappa adjusts for chance agreement, providing a more robust measure for categorical data. It ranges from -1 to +1, where higher values indicate stronger agreement.

3. Intraclass Correlation Coefficient (ICC)

The intraclass correlation coefficient measures agreement for continuous data. It accounts for both correlation and absolute agreement between raters, making it ideal for quantitative assessments.

4. Fleiss’ Kappa

When more than two raters are involved, Fleiss’ Kappa can evaluate agreement across multiple raters, adapting the kappa statistic for larger groups.

5. Gwet’s AC1

This method is useful when raters rarely agree by chance, helping to counteract the limitations of Cohen’s Kappa in cases of skewed data.

Factors Affecting Interrater Reliability

Several factors influence making it critical for researchers to control these variables:

1. Rater Training

Raters must receive comprehensive training to ensure they understand assessment criteria and apply them consistently.

2. Clear Rating Scales

Vague or poorly defined criteria increase subjective interpretation, reducing agreement. Clear operational definitions are essential.

3. Complexity of the Task

Simpler tasks tend to yield higher, while complex, nuanced assessments leave more room for differing interpretations.

4. Number of Raters

More raters generally increase reliability, but only if all raters are trained consistently.

5. Data Characteristics

Data that is inherently ambiguous or open to interpretation often produces lower.

How to Measure Interrater Reliability

The process of calculating depends on the type of data and the specific method selected. Below is a step-by-step outline for common approaches:

Step 1: Define Criteria

Ensure all raters use identical criteria with clearly defined scoring rules.

Step 2: Conduct Independent Ratings

Raters evaluate the same set of data independently.

Step 3: Choose the Appropriate Metric

- Use Cohen’s Kappa for two raters evaluating categorical data.

- Use Intraclass Correlation Coefficient (ICC) for continuous data with multiple raters.

- Use Fleiss’ Kappa for more than two raters evaluating categorical data.

Step 4: Analyze the Results

The output will typically be a reliability coefficient between 0 and 1, with values closer to 1 indicating stronger agreement.

Best Practices to Improve Interrater Reliability

Enhancing interrater reliability requires both procedural and analytical improvements:

- Develop Clear Guidelines: Detailed scoring rubrics and operational definitions limit subjective interpretation.

- Train Raters Regularly: Initial training combined with periodic refreshers helps maintain consistent application of criteria.

- Conduct Calibration Exercises: Before official data collection, raters should practice on sample data and compare results.

- Monitor Rater Drift: Over time, raters may deviate from initial criteria. Regular monitoring helps catch and correct this drift.

- Use Multiple Raters: Combining ratings from multiple raters reduces individual biases and enhances reliability.

Applications of Interrater Reliability

1. Healthcare

In medical diagnostics, ensures that different clinicians provide consistent diagnoses based on the same symptoms.

2. Psychology and Psychiatry

In clinical assessments, high helps ensure consistent diagnosis of psychological disorders across different practitioners.

3. Academic Research

Social science studies often rely on subjective data coding, where high interrater reliability enhances research credibility.

4. Performance Appraisal

In corporate environments, consistent performance evaluations across managers reflect good interrater reliability, enhancing fairness.

Common Challenges in Achieving High Interrater Reliability

Even with rigorous preparation, maintaining high can be challenging. Common obstacles include:

- Ambiguous Criteria: Subjective or poorly defined categories leave room for interpretation.

- Rater Bias: Personal beliefs, preferences, or prior experiences can influence ratings.

- Fatigue: Long rating sessions increase error rates as raters lose focus.

- Changing Contexts: Evolving definitions or criteria over time can lower reliability.

See Also: Pusoy Dos

FAQ’s

Q1. What does interrater reliability mean?

Interrater reliability refers to the degree of agreement between two or more raters evaluating the same data independently.

Q2. Why is interrater reliability important in research?

It ensures that results are consistent across different evaluators, boosting credibility and reducing bias.

Q3. What is a good interrater reliability score?

A score above 0.75 is generally considered good, with values closer to 1 indicating excellent reliability.

Q4. How is interrater reliability calculated?

Depending on data type, metrics like Cohen’s Kappa, Intraclass Correlation Coefficient, or Fleiss’ Kappa can be used.

Q5. What factors reduce interrater reliability?

Inconsistent training, vague criteria, complex tasks, and rater bias can lower interrater reliability.

Q6. Can interrater reliability be improved?

Yes, with clear guidelines, thorough training, calibration sessions, and regular quality checks, can be significantly improved.

Conclusion

Interrater reliability is essential in any field that requires multiple observers to evaluate data or performance. It ensures consistency, credibility, and reproducibility of results. By understanding what interrater reliability is, why it matters, and how to measure and improve it, researchers, clinicians, educators, and businesses can enhance the quality of their data and decisions. High interrater reflects a well-trained team, clear criteria, and a robust evaluation process. As subjective assessments become increasingly common in fields like healthcare, psychology, education, and corporate environments, investing in strong practices ensures fair, accurate, and credible evaluations.

One thought on “Interrater Reliability: Definition, Importance, Methods, and Best Practices for Consistent Ratings”